In the hallowed courts of Wimbledon, where tradition often reigns supreme, a subtle yet profound shift is underway. The familiar calls of human line judges are increasingly being replaced by the crisp, disembodied pronouncements of artificial intelligence. While heralded by some as the dawn of an era of perfect calls and undisputed accuracy, this technological leap, exemplified by tennis’s “robot umps,” offers a compelling and perhaps unsettling glimpse into a future where AI’s presence in our lives extends far beyond the sporting arena.

The transition, while promising precision, also exposes a disconcerting reality: AI, like any technology, is not infallible. Its errors, however rare, are delivered with an unyielding authority that brooks no argument, mirroring challenges we increasingly face in daily interactions with automated systems. This article delves into the implications of AI officiating in sports, exploring its benefits, its often-overlooked drawbacks, and the broader societal trends it foreshadows.

THE ASCENSION OF ARTIFICIAL INTELLIGENCE IN SPORTS

Sports officiating has historically been a domain of human judgment, fraught with the drama of contentious calls, emotional appeals, and the occasional, albeit legendary, outburst. From the umpire’s discerning eye in baseball to the referee’s whistle on the pitch, the human element has been integral to the narrative of competition. However, the relentless pursuit of fairness, coupled with technological advancements, has propelled a new paradigm.

The catalyst for this shift in tennis was undoubtedly the Hawk-Eye system. Introduced initially as a review mechanism, Hawk-Eye transformed officiating by providing an objective, visual confirmation of ball placement. Its brilliance lay not just in its accuracy, but in its ability to transform a moment of human dispute into a captivating on-screen spectacle. This innovation made electronic officiating palatable, transitioning the entertainment from a heated human confrontation to an equally engaging, albeit digital, re-enactment. It was, for a time, an optimal human-computer hybrid, allowing players to challenge calls while retaining the human official’s initial judgment.

However, the desire for absolute perfection, coupled with the ever-present drive for efficiency and cost reduction, pushed the boundaries further. The complete automation of line calls became the next logical step. The promise was clear: eliminate human error, biases, and the potential for perceived injustice. Yet, as Wimbledon and other tournaments embracing full AI officiating are discovering, the reality is far more complex than the promise.

THE CASE OF WIMBLEDON AND ITS ROBOT UMPS

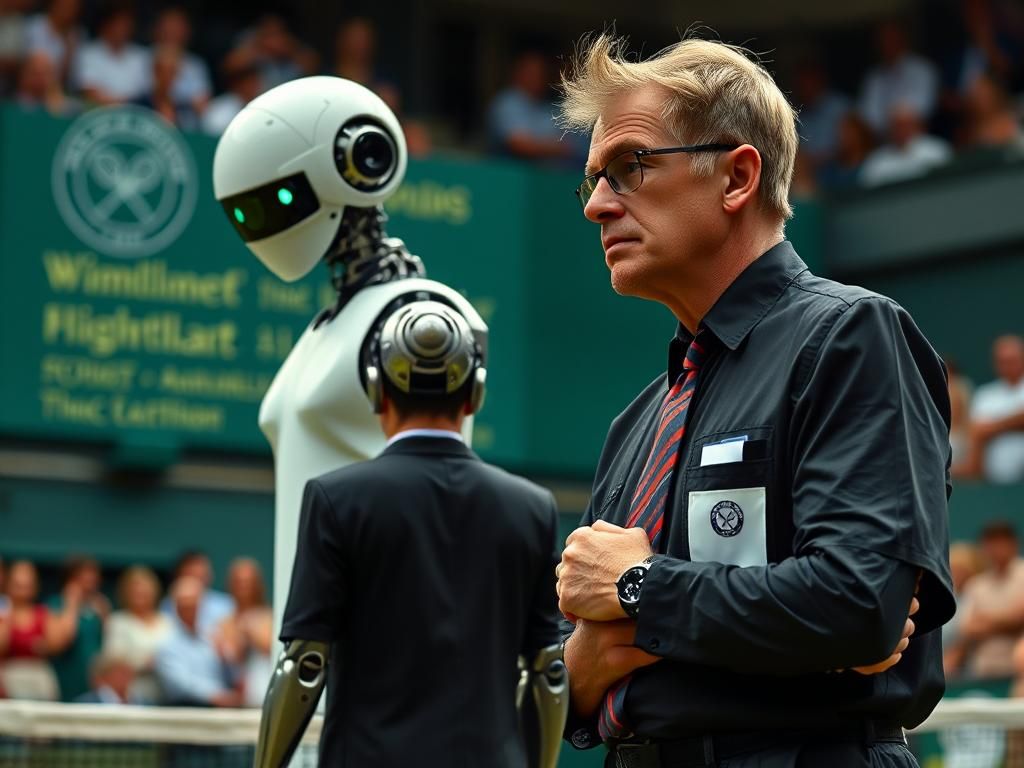

Wimbledon, a bastion of tennis tradition, has notably embraced AI line judges, signaling a significant departure from its past. Spectators now frequently hear an abrupt “OUT” call from an unseen voice, often leaving a moment of curious silence or a ripple of tittering through the crowd. While often correct, these calls sometimes provoke skepticism, even from the athletes themselves.

Britain’s prominent tennis stars, Emma Raducanu and Jack Draper, have voiced their frustrations, claiming the AI system played a role in their losses. Raducanu explicitly stated her hope that “they can kind of fix that.” This sentiment, however, met with little sympathy from the All England Club. Debbie Jevans, the club’s chair, retorted to the BBC, highlighting the irony: “It’s funny, because when we did have linesmen, we were constantly asked why we didn’t have electronic line calling because it’s more accurate than the rest of the tour.” Her statement underscores the inherent tension between the pursuit of accuracy and the experience of its implementation.

A particularly telling incident occurred when a ball hit by local favorite Sonay Kartal, which clearly landed long, was not called out by the system. It was later revealed that the system “wasn’t on” for that particular point. The human umpire decided to replay the point, much to the chagrin of Kartal’s opponent, Anastasia Pavlyuchenkova, who later complained to the umpire, “They stole a game from me.” While Pavlyuchenkova ultimately won the match, the incident served as a stark reminder of AI’s fallibility and the unexpected complications that arise when human oversight, or its absence, collides with automated systems.

THE FLAWED PROMISE OF AI: ACCURACY VERSUS ACCOUNTABILITY

The core paradox of AI officiating lies in its supposed infallibility. It is deployed under the premise that it is 100 percent accurate, yet real-world applications frequently reveal otherwise. The problem isn’t just the occasional error; it’s the unyielding nature of the error. When an AI makes a call, there is no one to argue with. There’s no human line judge to confront, no umpire to plead with, no emotional appeal possible. The decision is presented as fact, immutable and beyond questioning, even when a player’s intuition or visual evidence suggests otherwise.

This experience resonates far beyond the tennis court. Consider the all-too-common scenario of grappling with automated customer service. Anyone who has spent exasperating hours in a digital loop, arguing with a chatbot about a billing error or a mistaken charge that they know, with absolute certainty, is incorrect, will understand this pain. The inability to reach a human, to explain nuance, or to appeal to reason transforms a simple problem into an insurmountable wall of algorithmic indifference.

This frustration highlights a deeper societal issue. Sam Altman, a prominent figure in the AI world, has himself issued warnings. On a recent podcast, he candidly stated, “People have a very high degree of trust in ChatGPT, which is interesting because, like, AI hallucinates. It should be the tech you don’t trust that much.” Altman’s words are a crucial caution against blind faith in AI, underscoring that even its creators recognize its inherent limitations and potential for error. Yet, society often gravitates towards the fastest and most convenient answer, even if it sacrifices correctness or accountability.

In sports, this manifests as a drive for quick decisions, often at the expense of thoroughness or human judgment. Complaints about lengthy instant-replay delays often turn into calls for full automation. “Just let a computer do it,” is the common refrain. The consequence is a system that, while quick, is not always right and, crucially, offers no avenue for appeal or human interaction. The very human drama that makes sports so compelling (the tension, the challenge, the interaction between players and officials) is steadily eroded.

BEYOND THE BASELINE: SPORTS AS A PREDICTOR OF THE FUTURE

History shows that sports often serve as a harbinger of societal trends. From fashion and language to political activism, mental health awareness, and even the pursuit of perpetual youth, what happens in sports frequently finds its way into the broader cultural consciousness. Athletes, as figures of immense public attention, become de facto thought leaders, and their experiences with evolving technologies offer a window into our collective future.

The adoption of AI officiating is no exception. If athletes, often described as “supermen and women,” can be compelled to accept robot oversight, what does this imply for the average person in an office setting or a customer service interaction? If Jack Draper or Emma Raducanu, with their precise perception and intimate knowledge of their sport, cannot effectively challenge an AI’s call, what recourse will an individual have when an algorithm dictates their financial standing, medical treatment, or employment?

This “softening up” effect is potent. By normalizing AI’s unchallenged authority in a highly visible and beloved domain like sports, society at large may become increasingly accustomed to, and accepting of, algorithmic decisions across all facets of life, regardless of their fallibility. The emotional and practical consequences of this acceptance – the reduced avenues for human appeal, the chilling of dissent, and the prioritization of efficiency over equity – are profound.

THE INEVITABLE MARCH OF PROGRESS AND ITS COMPLICATIONS

Once AI integrates itself into the core mechanics of a system, its removal becomes virtually impossible. This is the nature of “progress” in the digital age. Even when profound negative side effects are acknowledged – much like the pervasive criticisms of social media’s impact on mental health – the advice rarely involves eliminating the technology but rather adapting to its presence. There can be no “backsliding” from such advancements.

The future of sports officiating, therefore, appears increasingly clear: a world of robot baseball umpires, AI football referees, and automated judging in various disciplines. The primary challenge for developers and governing bodies then becomes not perfecting the AI, but simulating human involvement just enough to prevent the public from being “too jarred by the absence of humanity.” Tennis, with its lifelike AI voices and continued presence of human umpires and ball kids, has already demonstrated a degree of success in creating this “zombie facsimile” – a system that appears familiar but lacks the essential human element of accountability and the capacity for nuanced judgment.

This trend extends beyond officiating. AI is permeating player analysis, strategy development, and even fan engagement, fundamentally reshaping the experience of sports. While promising optimized performance and enhanced insights, it also contributes to a growing distance from the raw, unpredictable human essence that has historically defined athletic competition. The implicit message is that efficiency, speed, and data-driven outcomes are paramount, even if it means sacrificing human discretion and the rich tapestry of interaction and occasional imperfection that traditionally makes sports so compelling.

NAVIGATING A WORLD GOVERNED BY ALGORITHMS

The lessons gleaned from AI officiating in sports are not confined to courts and fields; they are a microcosm of the challenges inherent in our increasingly algorithm-driven world. The drive for efficiency and automation, while offering tangible benefits in speed and cost, often comes at the expense of human agency, accountability, and the nuanced complexities of reality.

We are moving towards systems that, while purporting to be perfect, are demonstrably capable of error, yet offer no human avenue for redress. This creates a deeply frustrating and disempowering environment, where individuals are left without recourse when algorithms dictate outcomes. The “grim look into our future” isn’t about robots taking over, but about human beings being compelled to accept an automated authority that is sometimes wrong, always unyielding, and ultimately, beyond challenge.

The integration of AI, whether in sports or society at large, compels us to critically examine the trade-offs. Do we truly prioritize convenience and perceived accuracy above all else, even if it means sacrificing human connection, the right to appeal, and the inherent imperfections that paradoxically enrich our experiences? As AI continues its inexorable march into every facet of our lives, the need to define the boundaries of its authority and to preserve spaces for human judgment, empathy, and dissent becomes ever more urgent. Failing to do so risks building a world that is efficient and productive but ultimately less human and “less life.”