MIDJOURNEY UNVEILS BREAKTHROUGH AI VIDEO MODEL: V1 EXPLAINED

THE DAWN OF ACCESSIBLE AI VIDEO GENERATION

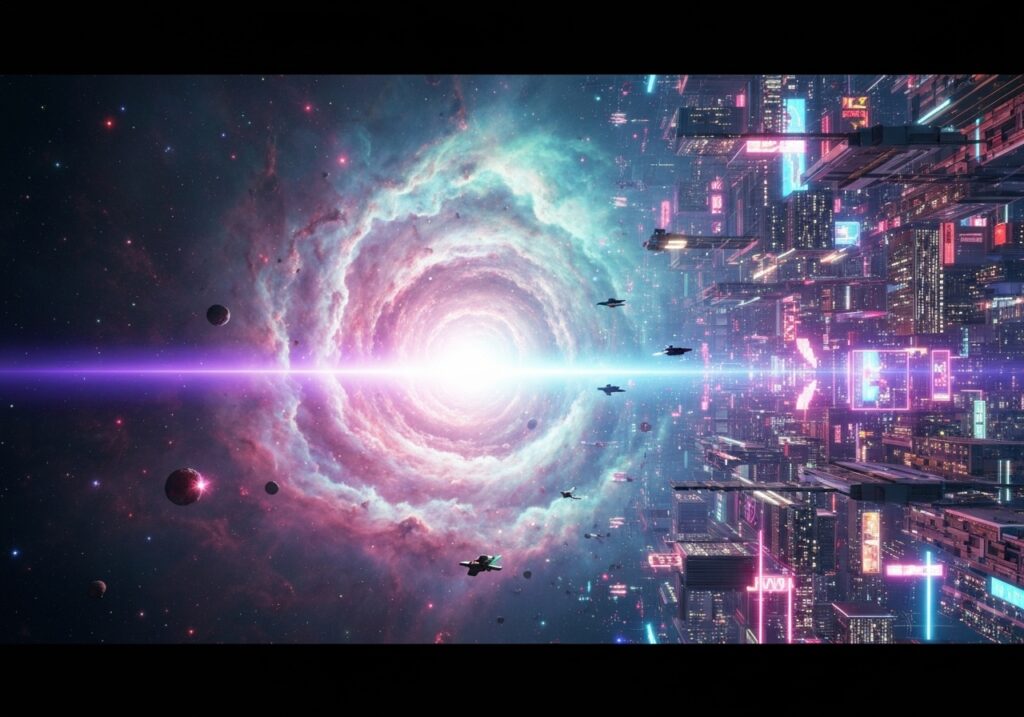

Generative image AI company Midjourney has officially launched its highly anticipated V1 Video Model, a tool intended to make AI-powered video creation widely accessible. Generating video from AI prompts has so far been complex and often costly, and this release aims to open that process to a much wider audience of digital content creators.

Midjourney, already a dominant force in text-to-image generation, positions V1 as a user-friendly and aesthetically pleasing solution. Its introduction has drawn attention across the generative AI community, particularly because of its pricing. Several advanced AI video models remain out of reach for many individual creators because of high costs or limited availability, while Midjourney V1 enters the market with a subscription priced for a mass audience.

UNPACKING MIDJOURNEY V1: FEATURES AND FUNCTIONALITY

At its core, Midjourney’s V1 Video Model leverages the platform’s established prowess in image generation, allowing users to transform their static visuals into dynamic motion. The process is designed to be intuitive, enabling creators to animate their designs with unprecedented ease. This integration ensures a seamless workflow for existing Midjourney users and provides a welcoming entry point for newcomers.

HOW DOES IT WORK?

The operation of Midjourney V1 is remarkably straightforward. Users can begin by generating an image within the Midjourney platform, as they normally would. Once an image is created, a new “Animate” option becomes available, allowing users to convert their chosen static image into a video clip. This animation process can be initiated either automatically, where the AI determines the optimal motion, or manually, giving creators more control over the final output. Users also have the flexibility to select between two distinct motion styles:

- Low Motion: Ideal for subtle, ambient scenes or instances where minimal movement is desired, lending a sense of calm or static continuity to the video.

- High Motion: Suited for more dynamic and action-oriented sequences, injecting significant movement and energy into the generated video.

The V1 model also offers an “extension” feature that lets users lengthen clips beyond the initial five seconds. Each extension adds approximately four seconds, and the process can be repeated up to four times, for a maximum length of roughly 21 seconds (5 + 4 × 4). This iterative extension gives creators some flexibility for building longer narratives or more elaborate visual sequences.
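
The extension arithmetic described above can be sketched in a few lines of Python. This is an illustration only, not Midjourney code: the function and constant names are hypothetical, and each extension is assumed to add exactly four seconds even though the figure given is approximate.

```python
# Figures taken from the article; names are hypothetical.
INITIAL_SECONDS = 5      # length of a freshly generated clip
EXTENSION_SECONDS = 4    # "approximately four seconds", treated as exact (assumption)
MAX_EXTENSIONS = 4       # a clip can be extended up to four times


def clip_length(extensions: int) -> int:
    """Return the approximate clip length in seconds after `extensions` extensions."""
    if not 0 <= extensions <= MAX_EXTENSIONS:
        raise ValueError(f"extensions must be between 0 and {MAX_EXTENSIONS}")
    return INITIAL_SECONDS + EXTENSION_SECONDS * extensions


if __name__ == "__main__":
    # Print the approximate length for every allowed number of extensions.
    for n in range(MAX_EXTENSIONS + 1):
        print(f"{n} extension(s): about {clip_length(n)} seconds")
```

Under these assumptions, a fully extended clip runs for about 21 seconds.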

A noteworthy aspect of V1 is its compatibility beyond Midjourney’s internal ecosystem. The model allows users to animate images sourced from outside the Midjourney platform, expanding its utility for a broader range of creative projects. However, it is important to note that, for now, V1 operates exclusively as a web-only application, meaning users must access it through a web browser rather than a dedicated desktop or mobile application.

PRICING AND MARKET COMPETITION

One of the most impactful aspects of Midjourney V1’s launch is its aggressive pricing strategy, which directly challenges the current market leaders in AI video generation. Midjourney has announced V1 will be available for just $10 per month, positioning it as an incredibly attractive option for aspiring creators and hobbyists.

This pricing directly targets the more expensive offerings from competitors. For context:

- OpenAI’s Sora: While highly anticipated for its advanced capabilities, Sora is primarily accessible to ChatGPT Plus and Pro users, costing $20 per month or $200 per month, respectively.

- Google’s Flow (and Veo): Flow, Google’s AI filmmaking tool built on its Veo video model, comes with a substantial price tag, reported to be around $249 per month, making it a premium solution aimed at professional studios or large enterprises.

- Adobe Firefly: Adobe’s generative AI offering, Firefly, starts at a comparable $9.99 per month, but that tier is limited, typically covering up to 20 five-second videos, beyond which costs increase.

- Runway’s Gen-4 Turbo: A well-established player in the AI video space, Runway’s Gen-4 Turbo video generation begins at $12 per month, placing it slightly above Midjourney’s introductory rate.

Midjourney’s $10 monthly fee is a clear statement of intent: to make high-quality AI video generation affordable and widely available. Users should still understand the cost structure within Midjourney itself. A V1 video job currently costs eight times as much as an image job on the platform, and each video job produces four five-second clips. Midjourney founder David Holz has said that this initial pricing is not final. The team plans to monitor V1’s usage closely over the coming month and adjust pricing as needed, balancing accessibility against operational costs.
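
To put the video cost in perspective, the figures above can be turned into a rough per-second estimate. The sketch below is a back-of-the-envelope calculation, not an official pricing formula: it reads the stated multiple as a video job costing 8 image jobs, and all names are hypothetical.

```python
# Figures taken from the article; names are hypothetical.
VIDEO_JOB_COST_IN_IMAGE_JOBS = 8   # a video job is stated to cost eight times an image job
CLIPS_PER_VIDEO_JOB = 4            # each video job produces four clips
SECONDS_PER_CLIP = 5               # each clip is five seconds long


def seconds_per_video_job() -> int:
    """Total seconds of footage produced by one video job."""
    return CLIPS_PER_VIDEO_JOB * SECONDS_PER_CLIP


def image_jobs_per_second_of_video() -> float:
    """Cost of one second of generated footage, measured in image jobs."""
    return VIDEO_JOB_COST_IN_IMAGE_JOBS / seconds_per_video_job()


if __name__ == "__main__":
    print(f"Footage per video job: {seconds_per_video_job()} seconds")
    print(f"Cost per second: {image_jobs_per_second_of_video():.2f} image jobs")
```

On these numbers, one video job yields 20 seconds of footage, so each second costs about 0.4 of an image job. Any pricing adjustment by Midjourney would change the constants, not the calculation.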

THE LONG-TERM VISION: REAL-TIME OPEN-WORLD SIMULATIONS

David Holz, Midjourney’s founder, views V1 not as a final product but as a “stepping stone” towards a far larger goal: the creation of “real-time open-world simulations.” In that future, AI would dynamically generate immersive, interactive virtual environments that respond instantly to user input and actions.

Holz articulates that the fundamental building blocks required to achieve such sophisticated simulations include:

- Advanced Image Models: The foundational ability to create highly detailed and coherent static visuals.

- Sophisticated Video Models: The capacity to bring those images to life with realistic and controllable motion.

- Robust 3D Models: The capability to construct three-dimensional environments and objects that can be manipulated and viewed from multiple perspectives.

- Real-time Processing: The critical component of performing all these generations and manipulations with virtually no latency, enabling true interactivity.

Midjourney’s strategy is to develop and release these individual models sequentially, each building on the last. The V1 Video Model is the latest step on that roadmap and shows the company’s commitment to incremental progress towards its long-term vision for generative AI.

THE ETHICAL IMPLICATIONS AND THE CALL FOR RESPONSIBILITY

As with any powerful new technology, the advent of highly realistic AI video generation brings with it significant ethical considerations and potential societal challenges. Midjourney’s founder, David Holz, has explicitly urged users to employ these new technologies “responsibly.” This plea underscores a growing concern among experts and the public regarding the potential misuse of generative AI.

THE MISINFORMATION THREAT

Perhaps the most immediate and pressing concern is the ease with which AI-generated video can be used to create and disseminate misinformation. As Mashable and other outlets have extensively reported, AI-generated video is rapidly approaching a point where it can be indistinguishable from genuine footage. The recent viral “emotional support kangaroo” video, which was widely believed to be real but later revealed as an AI creation, serves as a stark reminder of how quickly and effectively fabricated content can spread and deceive.

THE RISK OF DEEPFAKES

Beyond general misinformation, the proliferation of AI video technology raises serious alarms about deepfakes, particularly those created for malicious purposes, such as explicit non-consensual imagery. The problem has become severe enough to spur legislative action: publishing non-consensual explicit deepfakes is now a federal crime in the United States.

These concerns highlight the dual nature of AI tools. While they offer immense creative potential, their capacity for deception and harm necessitates a robust framework of ethical guidelines, user education, and, where necessary, legal safeguards. The responsibility falls not only on the creators of these models but also on the users to ensure their creations are used for constructive and ethical purposes.

LEGAL BATTLES AND THE FUTURE OF AI COPYRIGHT

The release of Midjourney V1 comes amid escalating legal challenges over the training data used by generative AI models. Entertainment giants Disney and Universal have filed a significant lawsuit against Midjourney, alleging that the company illegally trained its AI on copyrighted content and describing the platform as a “bottomless pit of plagiarism.”

This lawsuit is part of a broader legal trend where content creators and rights holders are increasingly challenging AI companies over the unauthorized use of their intellectual property to train AI models. The outcome of such cases could profoundly impact the development and deployment of generative AI, potentially setting precedents for how AI models can acquire and utilize data.

It’s also worth noting the broader industry context: Ziff Davis, Mashable’s parent company, has filed its own lawsuit against OpenAI, alleging copyright infringement in the training and operation of its AI systems. These legal battles underscore a critical juncture in the evolution of AI, where the rapid advancements in technology are colliding with established intellectual property laws, forcing a re-evaluation of digital rights in the age of generative creation.

THE PROMISE AND PATH FORWARD

Despite the ethical challenges and ongoing legal complexities, David Holz remains optimistic about the transformative potential of AI video. He believes that “properly utilized it’s not just fun, it can also be really useful, or even profound — to make old and new worlds suddenly alive.” This perspective highlights the immense positive applications of the technology, from breathing new life into historical archives to creating entirely new forms of artistic expression and storytelling.

Midjourney V1 is a testament to the rapid innovation occurring in the AI space. Its competitive pricing and user-friendly design significantly lower the barrier to entry for AI video creation, enabling a wider demographic of artists, content creators, and hobbyists to experiment with this powerful new medium. As Midjourney continues its journey towards real-time open-world simulations, V1 represents a vital step, offering a glimpse into a future where imagination can be translated into dynamic visual realities with unparalleled speed and ease.

The ongoing evolution of AI video models will undoubtedly bring further advancements, but also continued debates around ethics, copyright, and societal impact. Midjourney’s V1 is not just a new tool; it’s a catalyst in this ongoing conversation, pushing the boundaries of what’s possible while simultaneously demanding thoughtful consideration of its profound implications.