In an increasingly complex global landscape, the information environment has become a battlefield in its own right, particularly during times of geopolitical tension. Recent research highlights a disturbing trend in the online discourse surrounding the Iran-Israel conflict: the pervasive spread of sophisticated videos generated by artificial intelligence (AI). These fabricated visual narratives are not isolated incidents; they represent a concerted effort to manipulate public perception, sowing discord and amplifying falsehoods across major social media platforms. As digital technologies advance, so does the capacity to create highly convincing yet entirely fictitious visual content, challenging the foundations of trust in online information.

The implications of such misinformation reach far beyond the digital realm. It can influence public opinion, exacerbate tensions, and even incite real-world actions. Understanding the mechanisms behind these AI-driven campaigns, recognizing their hallmarks, and building digital literacy are essential in an era when distinguishing truth from fabrication is an ever more daunting task.

THE SURGE OF SYNTHETIC MEDIA IN CONFLICT ZONES

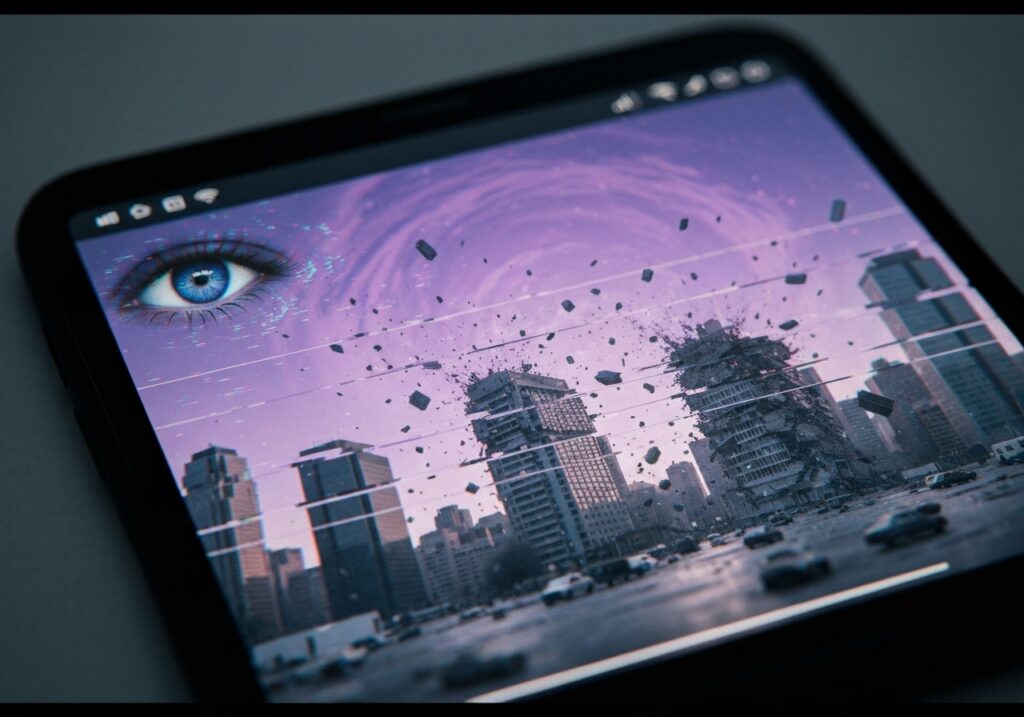

The current Iran-Israel tensions have served as a stark example of how rapidly AI-generated misinformation can proliferate. Researchers have identified numerous instances of synthetic media, primarily video, designed to portray dramatic and often catastrophic scenes that never actually occurred. These include:

- Fabricated Scenes of Destruction: Videos depicting high-rise buildings in Tel Aviv reduced to rubble or an Israeli military aircraft shot down, all entirely fabricated through AI.

- Fictitious Reporting: Clips featuring AI-generated avatars posing as reporters, delivering news from burning prisons in Tehran, lending a veneer of authenticity to false narratives.

- Fake Security Footage: Grainy, black-and-white videos purporting to be security camera footage of explosions at sensitive sites like Evin Prison, with subtle visual inconsistencies that betray their synthetic origin.

These clips, often indistinguishable from genuine footage to the untrained eye, are designed for maximum impact and virality. They exploit emotional responses and bypass critical analysis in a fast-paced news cycle. Rapid dissemination across platforms like X (formerly Twitter) and TikTok has allowed some of these videos to garner millions of views, shaping the narratives that reach audiences worldwide.

THE ANATOMY OF AI-GENERATED MISINFORMATION

The sophistication of today’s AI tools has dramatically lowered the barrier to entry for creating compelling fake content. What once required advanced technical skills and significant resources can now be achieved with relative ease, using widely accessible software and cloud-based services. Hany Farid, a professor at the University of California, Berkeley, and co-founder of AI detection startup GetReal Labs, explains that recent advancements have enabled the creation of full video sequences that mimic real-world events, complete with dynamic elements like explosions and seemingly authentic handheld camera effects.

The process often involves an AI image-to-video tool, which can take a still image or a series of images and generate realistic motion and effects. These tools are becoming increasingly adept at simulating lighting, shadows, textures, and even the subtle imperfections of real-world photography and videography, making visual anomalies harder to spot. However, trained eyes and specialized forensic tools can still identify tells such as:

- Inconsistent Details: Discrepancies in signs, logos, or architectural features that don’t match the purported location.

- Unnatural Movements: Subtle glitches, repeated motion, or unnaturally smooth movement of people or objects that betray their artificial origin.

- Lighting and Shadow Anomalies: Lighting that doesn’t behave realistically or shadows that fall incorrectly based on apparent light sources.

- Distorted Text or Faces: AI-generated text often appears garbled or distorted, and faces, while increasingly realistic, can sometimes exhibit uncanny valley effects or minor deformities upon close inspection.

These subtle imperfections are often missed by casual viewers, especially when content is consumed rapidly on mobile devices with lower-resolution displays.

THE DRIVING FORCES: COORDINATED NETWORKS AND GEOPOLITICAL AGENDAS

The spread of AI-generated content is rarely random. Researchers at Clemson University’s Media Forensics Hub have pointed to evidence of coordinated networks actively amplifying this fabricated content. In the context of the Iran-Israel conflict, some of these campaigns appear to be linked to actors promoting Iranian opposition messaging. The primary objective is often to sow doubt and undermine public confidence in the Iranian government, leveraging the emotional intensity of a crisis to push specific political narratives.

Darren Linvill, co-director of the Media Forensics Hub, emphasizes that while the underlying tactics are not new, AI lets these operations run with unprecedented efficiency. “It isn’t doing anything that one couldn’t do with previous technology,” Linvill stated, “it’s just doing it all cheaper, faster, and at greater scale.” This highlights a critical shift: the democratization of disinformation tools. State-sponsored actors, activist groups, and even individuals can now produce high-quality fake media without the extensive resources traditionally required, which makes the source of these campaigns increasingly difficult to trace.

The motivation behind such campaigns is multifaceted:

- Destabilization: Creating confusion and fear to destabilize political opponents or rival nations.

- Propaganda: Advancing specific political or ideological agendas by portraying events in a skewed or entirely false light.

- Undermining Trust: Eroding public trust in mainstream media, official sources, and even in the concept of objective truth itself, paving the way for alternative, fabricated realities.

- Polarization: Exacerbating existing societal divisions by appealing to strong emotions and pre-existing biases.

PLATFORM RESPONSES AND THE ONGOING CHALLENGE

Social media platforms find themselves at the forefront of this battle against AI-generated misinformation. Their response strategies vary, yet all face significant challenges in keeping pace with the rapid evolution of AI capabilities. TikTok, for instance, has stated that it prohibits harmful misinformation and AI-generated content that fakes authoritative sources or crisis events, confirming that it has removed some of the videos related to the Iran-Israel conflict. X, on the other hand, often relies on its “Community Notes” feature, a crowdsourced fact-checking system, to add context or warnings to potentially misleading posts.

However, these measures are often reactive rather than proactive. By the time a video is identified, reviewed, and potentially removed or flagged, it may have already accumulated millions of views, leaving a lasting impression on countless users. The sheer volume of content uploaded daily, combined with the increasing sophistication of AI, makes automated detection an immense technical challenge. Furthermore, the global nature of these platforms means that content moderation policies must contend with diverse cultural contexts and legal frameworks, further complicating enforcement.

The debate around platform responsibility continues to intensify. Critics argue that platforms need to invest more in AI detection technologies, implement stricter content policies, and be more transparent about the origin of content, especially during sensitive global events. However, platforms often push back, citing the difficulty of distinguishing between satire, creative expression, and malicious disinformation, while also grappling with concerns about free speech.

NAVIGATING THE AI INFORMATION LANDSCAPE: ADVICE FOR USERS

In an environment saturated with synthetic media, individual media literacy becomes the strongest defense. Hany Farid’s advice is blunt: “Stop getting your news from social media, particularly on breaking events like this.” For the many people who rely on these platforms for information, however, following such blanket advice is often impractical. A more nuanced approach involves developing critical thinking skills and adopting habits that promote responsible information consumption:

- Verify Sources: Always question the origin of dramatic or sensational content. Is it from a reputable news organization? Does the account have a history of sharing accurate information? Check the “About Us” section of unfamiliar sites.

- Cross-Reference: Before accepting any claim as fact, seek corroboration from multiple, diverse, and credible news outlets. If only one source is reporting something extraordinary, be skeptical.

- Look for Anomalies: Train your eye to spot the subtle inconsistencies often present in AI-generated videos: strange blurring, unnatural movements, distorted backgrounds, or text that doesn’t quite make sense. Listen for unusual audio qualities or robotic voices.

- Consider the Context: Is the content designed to evoke a strong emotional reaction? Does it align perfectly with a specific political agenda? Misinformation often plays on fears, anger, or existing biases.

- Be Wary of Speed: Breaking news environments are fertile ground for misinformation. Be cautious of “first reports” or content shared immediately after an event, as these are often unverified or fabricated.

- Use Fact-Checking Tools: Consult established fact-checking organizations and websites (e.g., Snopes, PolitiFact, AP Fact Check, Reuters Fact Check), which often debunk viral misinformation quickly.

- Think Before You Share: Resist the urge to share content that hasn’t been verified. Every share amplifies the message, regardless of its truthfulness.
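
The habits above can also be read as a simple checklist. The Python sketch below is only an illustration of that idea: the `PostSignals` fields, the two-confirmation threshold, and the 60-minute window are hypothetical choices made for this example, not values drawn from any researcher or platform, and a clean result does not prove that a post is authentic.

```python
from dataclasses import dataclass


@dataclass
class PostSignals:
    """Answers a reader records about one post (all fields are hypothetical)."""
    source_is_reputable: bool        # Verify Sources
    independent_confirmations: int   # Cross-Reference
    has_visible_anomalies: bool      # Look for Anomalies
    is_emotionally_charged: bool     # Consider the Context
    minutes_since_event: int         # Be Wary of Speed


def red_flags(post: PostSignals) -> list[str]:
    """Return the checklist items that this post fails."""
    flags = []
    if not post.source_is_reputable:
        flags.append("unverified source")
    if post.independent_confirmations < 2:   # assumed threshold
        flags.append("not corroborated")
    if post.has_visible_anomalies:
        flags.append("visual or audio anomalies")
    if post.is_emotionally_charged:
        flags.append("emotionally charged")
    if post.minutes_since_event < 60:        # assumed "first reports" window
        flags.append("very early report")
    return flags


def ok_to_share(post: PostSignals) -> bool:
    """Think Before You Share: hold back if any red flag is raised."""
    return not red_flags(post)


viral_clip = PostSignals(False, 0, True, True, 10)
print(red_flags(viral_clip))
print(ok_to_share(viral_clip))
```

For the `viral_clip` example every item on the checklist is flagged, so the sketch advises against sharing. The point is the habit of running through each question before amplifying a post, not the specific numbers.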

THE BROADER IMPLICATIONS FOR GEOPOLITICS AND TRUST

The proliferation of AI-generated misinformation, especially during critical geopolitical conflicts, poses a significant threat to global stability and democratic processes. When citizens can no longer distinguish real events from fabricated ones, the foundation of informed decision-making erodes. This can lead to:

- Erosion of Trust: A widespread distrust in institutions, media, and even other citizens, creating a fragmented society.

- Increased Polarization: Deepening divides as individuals retreat into echo chambers, consuming only information that confirms their existing biases.

- Escalation of Conflicts: Fabricated narratives can be used to justify aggression, incite violence, or prolong conflicts by distorting realities on the ground.

- Voter Manipulation: During elections, AI-generated content can be used to spread false narratives about candidates or parties, influencing electoral outcomes.

Addressing this challenge requires a multi-pronged approach involving technological solutions (better AI detection), policy and regulatory frameworks (platform accountability, legal repercussions for spreading disinformation), and educational initiatives (fostering critical thinking and digital literacy from an early age). It’s a continuous arms race where the tools of deception are constantly evolving, requiring equally adaptive defense mechanisms.

CONCLUSION

The Iran-Israel conflict serves as a critical case study in the weaponization of artificial intelligence for information warfare. AI-generated videos, designed to mislead and manipulate, are no longer a futuristic concept but a present-day reality with tangible impacts. While social media platforms strive to implement safeguards, the ultimate responsibility for discerning truth rests with the individual user.

In this new digital age, critical inquiry is not just an academic exercise; it is a fundamental skill for civic engagement and personal well-being. By remaining vigilant, questioning what we see, and seeking information from credible and diverse sources, we can collectively build a more resilient information ecosystem and safeguard against the insidious power of AI-fueled falsehoods.