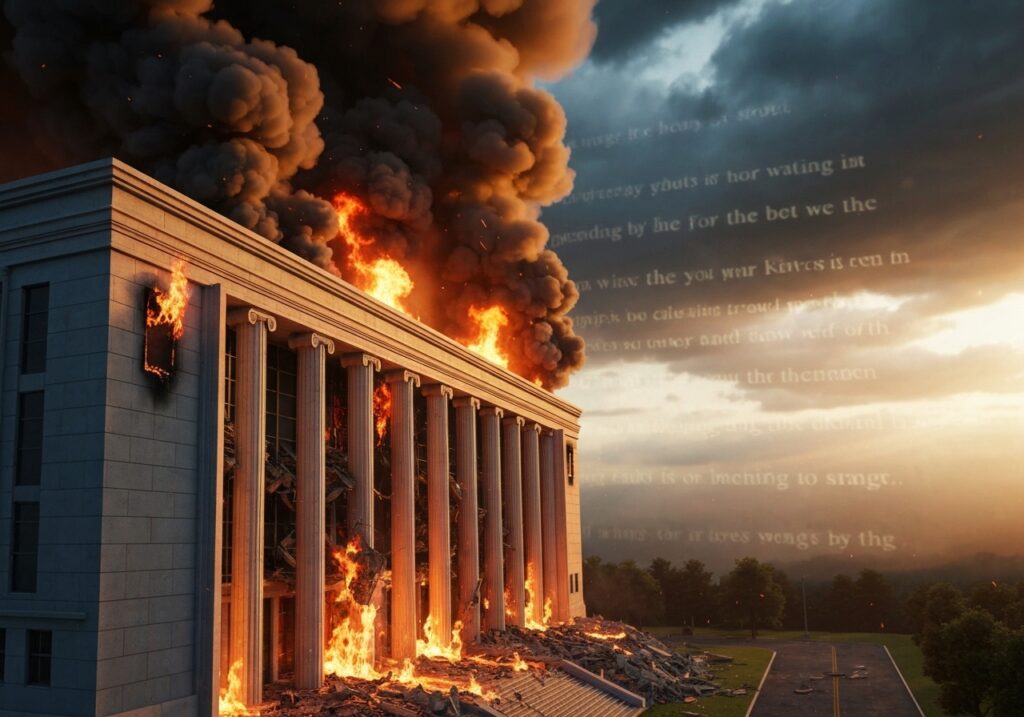

The digital landscape, once a beacon of information and connectivity, has increasingly become a battleground where truth and deception blur. In an era dominated by rapid technological advancement, the proliferation of misinformation has taken on a chilling new dimension through the advent of artificial intelligence (AI) generated imagery. A stark illustration of this perilous trend recently emerged with the false circulation of an AI-generated image purporting to show a “burnt Mossad headquarters.” This incident, while specific, serves as a powerful microcosm of the profound challenges posed by synthetic media in shaping public perception, fueling narratives, and potentially inciting real-world instability.

THE RISE OF DIGITAL MISINFORMATION IN THE AGE OF AI

The incident involving the alleged “burnt Mossad headquarters” image highlights a critical vulnerability in our interconnected world: the ease with which convincing, yet entirely fabricated, visual content can be created and disseminated. Unlike traditional photo manipulation, which often left detectable traces, modern AI tools leverage sophisticated algorithms to generate hyper-realistic images from scratch. These aren’t mere edits; they are entirely new constructions, born from vast datasets and designed to mimic reality with unnerving accuracy. The malicious use of such technology for propaganda, discrediting entities, or creating chaos has become a pressing global concern. This particular image, striking in its visual impact, capitalized on existing geopolitical tensions, making it fertile ground for rapid and uncritical sharing across social media platforms.

THE ANATOMY OF A FAKE: HOW AI IMAGES DECEIVE

Understanding how AI-generated images deceive requires a brief look into the underlying technology. Most high-quality synthetic images today are created using deep learning models, typically Generative Adversarial Networks (GANs) or diffusion models. Both learn patterns, textures, and structures from immense collections of real-world images. In a GAN, a generator component creates images while a discriminator component tries to distinguish real images from fake ones; through this adversarial process, the generator steadily improves until even the discriminator struggles to tell the difference. Diffusion models, which power most current text-to-image tools, take a different route: they are trained to remove noise from images, and they generate new ones by starting from random noise and denoising it step by step, usually guided by a text prompt. Either way, the result is synthetic media that can fool the human eye, especially when viewed quickly on a small screen or in a low-resolution format.
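For readers who want the formal version, the adversarial process behind GANs is usually written as a two-player minimax objective. The notation below follows the standard textbook formulation rather than anything specific to the tool behind this particular image: $G$ is the generator, $D$ is the discriminator's estimated probability that an image is real, $x$ is a real image drawn from the training data, and $z$ is the random noise the generator starts from.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this quantity large by scoring real images near 1 and generated images near 0, while the generator tries to make it small by producing images the discriminator scores as real.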

The accessibility of user-friendly AI image generation tools has put this powerful technology into the hands of a broader audience, including those with malicious intent. What once required specialized skills in graphic design and photo editing can now be achieved with simple text prompts. This democratization of AI image creation significantly lowers the barrier to entry for misinformation campaigns, making it easier for individuals or state-sponsored actors to produce and spread fabricated content at scale. The perceived authenticity of these images makes them potent vectors for false narratives, as visual evidence often carries more weight than text alone.

THE SPECIFIC CASE: ‘BURNT MOSSAD HEADQUARTERS’ ANALYSIS

The image purporting to show a “burnt Mossad headquarters” was a prime example of a fabricated visual designed to capitalize on sensationalism and a specific geopolitical context. The exact visual tells vary from one fake to another, but AI-generated imagery often contains subtle inconsistencies that keen eyes or analytical tools can detect. These might include:

- Illogical Details: Elements that don’t quite make sense in the context of the scene, such as floating objects, non-existent architectural features, or strange light sources.

- Repetitive Patterns or Textures: AI models sometimes generate textures or patterns that are too uniform or repeat in unnatural ways, particularly in areas like rubble, smoke, or brickwork.

- Distorted or Nonsensical Text: Text within an AI-generated image is a common giveaway, as it often appears as gibberish, warped, or inconsistent in style and size.

- Uncanny Valley Effects: While improving, AI can still struggle with complex details like hands, faces (if present), or intricate machinery, resulting in a subtly “off” or unsettling appearance.

- Lack of Consistent Physics: Smoke that doesn’t behave realistically, fire that seems pasted on, or debris that defies gravity can be indicators.

The primary intent behind circulating such an image is almost always to manipulate public opinion, sow discord, or validate pre-existing biases. In the context of a highly sensitive entity like Mossad, a fabricated image of destruction could serve to bolster specific political narratives, incite anti-state sentiment, or simply spread panic and confusion. The rapid debunking of such fakes by reputable fact-checking organizations and official sources is crucial to prevent widespread belief and consequential actions based on false premises.

THE DANGEROUS ECHO CHAMBER: SPREAD AND IMPACT

Once a compelling AI-generated image enters the digital ecosystem, its spread can be alarmingly swift. Social media platforms, with their algorithmic amplification and instant sharing mechanisms, act as accelerators for misinformation. Users, often driven by emotion, confirmation bias, or a desire to be first to share “breaking news,” frequently disseminate content without verifying its authenticity. This creates an echo chamber effect, where false information is repeatedly shared within like-minded communities, reinforcing its perceived credibility. The image of a “burnt Mossad headquarters,” for instance, would likely resonate strongly within communities already predisposed to anti-Israeli sentiments, or those seeking sensational news, regardless of veracity.

The real-world consequences of such digital hoaxes are far from trivial. They can:

- Erode Trust: Consistently encountering fabricated content makes it difficult for the public to discern truth from fiction, leading to a general distrust of all media, including legitimate news sources.

- Fuel Extremism: Misinformation can reinforce extremist ideologies, providing “visual evidence” for false claims and potentially inciting violence or hate speech.

- Impact Geopolitical Relations: False images concerning sensitive national security entities or events can escalate tensions between nations, trigger diplomatic incidents, or even influence policy decisions based on inaccurate information.

- Undermine Social Cohesion: When communities are fed different versions of reality, it becomes harder to have productive discourse, leading to societal fragmentation.

- Cause Financial or Reputational Damage: For targeted entities, widespread fakes can cause significant reputational harm, and debunking them can be costly and challenging.

The speed at which misinformation spreads means that debunking efforts often lag, struggling to catch up with the initial viral surge. This highlights the urgent need for proactive strategies in digital literacy and content verification.

COMBATING THE CONTAGION: STRATEGIES FOR DIGITAL LITERACY

In the face of increasingly sophisticated AI-generated fakes, cultivating strong digital literacy skills is paramount for individuals. It’s no longer enough to be a passive consumer of information; active skepticism and verification are essential.

CRITICAL THINKING AND SKEPTICISM

- Question the Source: Always consider where the image originated. Is it from a reputable news organization, an official government channel, or an unknown social media account? Be wary of anonymous sources or those with a clear agenda.

- Recognize Emotional Manipulation: Misinformation often preys on strong emotions like fear, anger, or outrage. If an image elicits an immediate, intense emotional reaction, pause and verify before reacting or sharing.

- Don’t Share Without Verifying: The impulse to share “breaking news” can be strong, but irresponsible sharing amplifies misinformation. Take a moment to verify before clicking that share button.

VERIFICATION TOOLS AND TECHNIQUES

Several practical tools and techniques can help in verifying images:

- Reverse Image Search: Tools like Google Images, TinEye, or Yandex allow you to upload an image and see where else it has appeared online. This can reveal if the image is old, has been used in a different context, or is associated with a known misinformation campaign.

- Metadata Analysis: Although social media platforms often strip it, some images retain metadata (EXIF data) that can reveal details about the camera used, date, and location. Tools exist to view this if available.

- AI Detection Tools: While still evolving and not foolproof, some AI-powered tools are being developed to identify artifacts indicative of AI generation. These are typically for expert use but are becoming more accessible.

- Cross-Referencing: Check if the alleged event is being reported by multiple, credible, independent news organizations. If only one obscure source is reporting it, especially with a highly sensational image, be skeptical.

- Look for Context: Is there a corresponding news article? Does the visual information align with the textual claim? Discrepancies are red flags.
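To make the metadata check above more concrete, here is a minimal sketch in Python that tests whether a JPEG file still carries an EXIF block at all. The function name `has_exif_segment` is hypothetical, and the parser is deliberately simplified: it walks the file's header segments looking for the APP1 segment that begins with the `Exif` identifier, and it does not handle every malformed or padded file. Keep in mind that a missing EXIF block proves nothing on its own, since platforms routinely strip it, and a present one can be forged.

```python
import struct


def has_exif_segment(data: bytes) -> bool:
    """Return True if the JPEG bytes contain an APP1 segment holding EXIF data.

    Simplified illustration: assumes well-formed header segments.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        return False
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:  # each segment must start with 0xFF
            return False
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # start of image data or end of file
            return False
        # The 2-byte big-endian length counts itself plus the payload.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length  # jump to the next segment
    return False


# Two tiny hand-built byte strings standing in for real files.
with_exif = (b"\xff\xd8\xff\xe1" + struct.pack(">H", 12)
             + b"Exif\x00\x00MM\x00*" + b"\xff\xd9")
without_exif = (b"\xff\xd8\xff\xe0" + struct.pack(">H", 7)
                + b"JFIF\x00" + b"\xff\xd9")

print(has_exif_segment(with_exif))
print(has_exif_segment(without_exif))
```

For a real file, the same function could be called on the bytes read from disk in binary mode. A dedicated EXIF viewer is still the better choice for actually reading the camera, date, and location fields.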

UNDERSTANDING AI ARTIFACTS

Training your eye to spot common AI tells is an increasingly valuable skill. Beyond the general “uncanny valley” feeling, pay attention to:

- Hands and Fingers: Still a notorious challenge for AI. Look for too many or too few fingers, distorted shapes, or unnatural poses.

- Eyes and Teeth: Often appear distorted, asymmetrical, or have an unnatural gleam.

- Background Inconsistencies: Distorted or repetitive patterns in backgrounds, strange blurs, or objects that defy logic.

- Lighting and Shadows: Often appear inconsistent with the supposed light source.

- Reflections: Can be strangely distorted or absent where they should be.

THE ROLE OF PLATFORMS AND POLICYMAKERS

While individual literacy is vital, technology companies and governments also bear significant responsibility in combating the spread of AI-generated misinformation. Social media platforms, as primary conduits for information, need to implement more robust content moderation policies, invest in AI detection technologies, and clearly label AI-generated or manipulated content. Initiatives for digital provenance, such as content authentication certificates or cryptographic watermarks that signal an image’s origin and modifications, are crucial steps towards building trust in online media. Some platforms are already experimenting with AI detectors and “synthetic media” labels.
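The idea behind digital provenance can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any platform actually implements it: real provenance schemes generally rely on public-key signatures and certificates, whereas this sketch substitutes a shared-secret HMAC from the standard library to stay self-contained. The function names, the manifest layout, and the "Example Newsroom" origin are all hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(image_bytes: bytes, origin: str, key: bytes) -> dict:
    """Bind an origin claim to the exact image bytes and sign the claim."""
    claim = {
        "origin": origin,
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    # Assumption: HMAC stands in for a real public-key signature.
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "tag": tag}


def verify_manifest(image_bytes: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claim is untampered and still matches the image."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["tag"]):
        return False  # the claim itself was altered
    current_hash = hashlib.sha256(image_bytes).hexdigest()
    return manifest["claim"]["sha256"] == current_hash


key = b"demo-signing-key"        # hypothetical secret held by the publisher
original = b"raw image bytes"    # stand-in for a real image file
manifest = make_manifest(original, "Example Newsroom", key)

print(verify_manifest(original, manifest, key))
print(verify_manifest(b"edited image bytes", manifest, key))
```

The point of the design is that any change to the image, or to the claim about where it came from, makes verification fail, so a viewer can tell the difference between "unmodified since publication" and "unknown".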

Policymakers globally are grappling with how to regulate deepfakes and misinformation without infringing on free speech. This involves debates around mandating disclosure for AI-generated content, imposing penalties for malicious dissemination, and fostering international cooperation to track and disrupt disinformation networks. The balance between protecting freedom of expression and safeguarding against harmful content is delicate, but the urgency of the threat demands innovative legal and ethical frameworks.

LOOKING AHEAD: THE EVER-EVOLVING LANDSCAPE OF DECEPTION

The capabilities of AI are advancing at an astonishing pace, meaning that the visual fidelity of synthetic media will only continue to improve, making detection even more challenging. What appears as a “tell” today may be resolved by AI models tomorrow. This necessitates continuous adaptation from fact-checkers, researchers, and the general public. The fight against AI-generated misinformation is not a one-time battle but an ongoing war of attrition, requiring constant vigilance, education, and technological innovation. It also underscores the importance of fostering a culture of verifiable information consumption across all demographics.

CONCLUSION: UPHOLDING TRUTH IN THE DIGITAL AGE

The false circulation of an AI image depicting a “burnt Mossad headquarters” serves as a stark warning of the insidious nature of modern disinformation. It underscores that in the digital age, seeing is no longer necessarily believing. As AI tools become more sophisticated and accessible, the responsibility to critically evaluate the information we consume and share falls on each individual. By embracing digital literacy, utilizing verification tools, and advocating for stronger ethical guidelines and regulations from tech platforms and governments, we can collectively work to uphold the integrity of our information ecosystems. The fight for truth in the digital realm is paramount, for upon it rests the foundation of informed public discourse, societal trust, and global stability.