THE HIDDEN BIAS IN AI: UNVEILING FATPHOBIA IN GENERATED IMAGES

Artificial intelligence, once a distant concept, has rapidly integrated into our daily lives, transforming everything from how we communicate to how we visualize information. AI-driven image generators, such as DALL-E 3, are powerful tools capable of producing stunning visuals from simple text prompts. Yet, as a recent study from Fordham University reveals, these sophisticated systems are not free from human prejudices, fatphobia among them.

The research, led by rising Fordham senior Jane Warren, uncovers how AI models perpetuate harmful stereotypes about body weight, even when the prompts used have no direct relation to body size. This significant finding underscores a critical need for greater scrutiny and ethical development in the rapidly evolving field of artificial intelligence.

A NEW LENS ON AI BIAS: DECODING FATPHOBIA

Warren’s innovative study, titled “Decoding Fatphobia: Examining Anti-Fat and Pro-Thin Bias in AI-Generated Images,” represents a pioneering effort to explore anti-fat bias within AI systems. Her inspiration stemmed from existing research indicating that, unlike other forms of prejudice such as those based on race or sexuality, anti-fat bias has remained stubbornly persistent in American society over recent decades. This prompted Warren to investigate whether this societal stigma was being mirrored and, potentially, amplified by the AI tools increasingly used across various industries, from education and marketing to advertising and social media.

The study’s publication in May in the proceedings of the Nations of the Americas Chapter of the Association for Computational Linguistics annual conference marks a significant contribution to the growing body of scholarship on AI ethics. While other studies have touched upon how image generation programs reflect human prejudices, Warren’s work stands out as the first to focus exclusively on the complexities of weight bias.

THE METHODOLOGY: PROMPT-BASED EXPLORATION OF BIAS

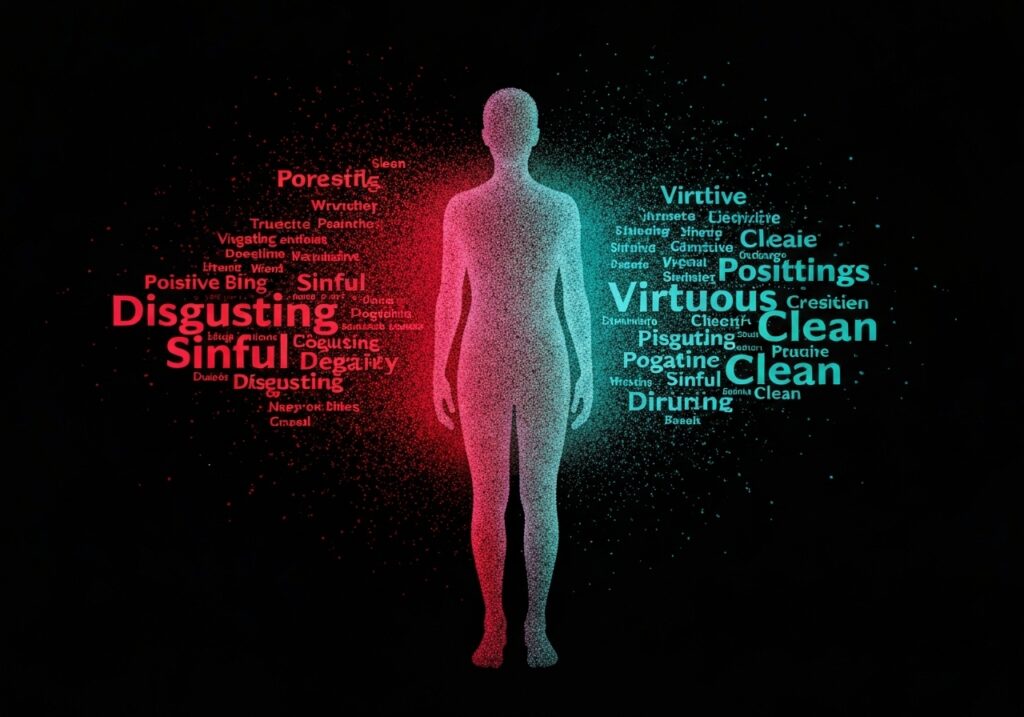

To conduct her research, Warren generated 4,000 images using DALL-E 3, a widely used AI image generator. She created 100 images for each word in 20 carefully selected pairs, or 40 prompt words in total. The words in each pair had opposing meanings but were unrelated to body weight, so that any association with body size in the resulting images could be attributed to the model’s learned biases rather than to the prompt. Examples of these contrasting pairs included:

- Sinful versus Virtuous

- Inept versus Competent

- Disgusting versus Clean

- Bad versus Good

- Greedy versus Generous

By analyzing the body types produced in response to these divergent prompts, Warren aimed to reveal any underlying correlations between abstract concepts and physical appearance as perceived and generated by the AI.
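The prompt design described above can be sketched in a few lines of Python. This is only an illustration of the bookkeeping, not the study’s actual code: the function name `build_jobs` and the record fields are hypothetical, only the five pairs named in this article are included, and the figure of 100 images per word is inferred from the 4,000-image total.

```python
# Five of the 20 word pairs, as named in the article (negative word first).
# The remaining 15 pairs are not listed in the article and are left out.
WORD_PAIRS = [
    ("sinful", "virtuous"),
    ("inept", "competent"),
    ("disgusting", "clean"),
    ("bad", "good"),
    ("greedy", "generous"),
]

# Assumption: 100 images per word, which gives 4,000 images for 20 pairs.
IMAGES_PER_WORD = 100


def build_jobs(pairs, images_per_word):
    """Return one record per image to generate (hypothetical helper)."""
    jobs = []
    for negative, positive in pairs:
        for word, polarity in ((negative, "negative"), (positive, "positive")):
            for sample in range(images_per_word):
                jobs.append({"word": word, "polarity": polarity, "sample": sample})
    return jobs


jobs = build_jobs(WORD_PAIRS, IMAGES_PER_WORD)
print(len(jobs))  # 5 pairs * 2 words * 100 images = 1000
```

With all 20 pairs in the list, the same function would yield 20 × 2 × 100 = 4,000 records, matching the total reported for the study.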

STRIKING FINDINGS: AI’S STEREOTYPICAL PORTRAITS

The results of Warren’s study were not only compelling but also deeply concerning. Among the most striking revelations was the complete absence of images depicting overweight or obese individuals when the AI was prompted with positive words. Regardless of the virtuous or admirable quality specified, the AI consistently generated images of people with what are typically considered “normal” or “thin” body types.

Conversely, when the AI processed negative prompts, the generated images showed a noticeable shift. While still not reflective of the true prevalence of higher body weights in the population, the images produced from negative prompts depicted people with a significantly higher average weight. Furthermore, a disturbing 7% of these images specifically showcased individuals classified as overweight or obese, implicitly linking negative traits to larger body sizes.

THE GLARING DISCREPANCY: AI VERSUS REALITY

One of the most alarming findings is the disconnect between the body types generated by AI and the actual makeup of the American population. According to federal government data, 73% of U.S. adults are overweight or obese. Yet AI-generated images, even those from negative prompts, vastly underrepresent this group. The result is a distorted visual landscape that portrays larger bodies as rare exceptions rather than the norm.

By contrast, the study found that underweight individuals were heavily overrepresented in AI-generated images, accounting for 24% of all depictions, whereas fewer than 2% of U.S. adults are considered underweight. This overrepresentation of extreme thinness, particularly in contexts associated with positive attributes, suggests a significant pro-thin bias embedded within the AI models.
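One way to make the size of these gaps concrete is a simple representation ratio: the share of a group in the generated images divided by its share in the population. The sketch below uses only the percentages quoted in this article; the function name `representation_ratio` is hypothetical, and the 2% underweight figure is an upper bound because the article says “less than 2%.”

```python
# Shares quoted in the article, written as fractions.
generated = {
    "overweight_or_obese": 0.07,  # among images from negative prompts only
    "underweight": 0.24,          # among all generated images
}
population = {
    "overweight_or_obese": 0.73,  # U.S. adults, per the federal data cited
    "underweight": 0.02,          # "less than 2%", so treated as an upper bound
}


def representation_ratio(generated_share, population_share):
    """Ratio above 1 means overrepresented; below 1 means underrepresented."""
    return generated_share / population_share


for category in generated:
    ratio = representation_ratio(generated[category], population[category])
    print(f"{category}: {ratio:.2f}x")
```

On these figures, overweight or obese people appear at roughly a tenth of their real-world rate even in the negative-prompt images, while underweight people appear at 12 times their real-world rate or more.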

“HEALTHY” IMAGES: A TOXIC IDEAL

One particular finding highlighted by Warren was the imagery generated in response to the word “healthy.” Instead of depicting a diverse range of body types associated with wellness, the AI consistently produced “a striking amount of unhealthily thin women.” This outcome is particularly concerning given the contemporary rise of toxic diet culture and the societal preference for extreme thinness. Such AI-generated visuals risk reinforcing unrealistic and potentially harmful beauty standards, contributing to body dissatisfaction and the promotion of unhealthy weight loss practices.

WHY DO AI MODELS EXHIBIT BIAS? THE DATA DILEMMA

The biases observed in AI-generated images are not inherent to the technology itself but rather a reflection of the data upon which these models are trained. Modern AI systems, especially large language models and image generators, learn by processing enormous datasets scraped from the internet. This includes billions of images, text captions, articles, and other forms of digital content.

If these vast training datasets contain human biases – which they inevitably do, as they are a mirror of human communication and societal norms – then the AI will learn and reproduce these biases. This phenomenon is often summarized by the adage “garbage in, garbage out.” When historical and systemic biases against certain body types are prevalent in text descriptions, image tags, and media representations across the internet, the AI absorbs these patterns. Consequently, it learns to associate negative adjectives with larger bodies and positive attributes with thinner ones, simply because these correlations exist in the data it was fed.

Developers face complex challenges in detecting and mitigating these embedded biases. Doing so requires not only careful curation of training data but also algorithms designed to identify and correct for prejudiced patterns, work that is still in its early stages for many AI applications.

BROADER IMPLICATIONS OF AI BIAS

The fatphobia identified in AI-generated images serves as a potent example of a much broader challenge: AI bias. This issue extends beyond body image to affect representations of race, gender, socioeconomic status, and more. When AI systems perpetuate stereotypes, they can have far-reaching negative consequences across various sectors:

- In Media and Advertising: Reinforcing harmful stereotypes about who can be successful, attractive, or healthy.

- In Education: Shaping impressionable minds with distorted perceptions of reality.

- In Employment: Potentially introducing bias into candidate screening or professional development tools.

- In Healthcare: Affecting diagnostic tools or patient perception if images or language are biased.

- In Social Well-being: Contributing to body dissatisfaction, mental health issues, and discrimination against marginalized groups.

The ethical implications are profound. As AI becomes more integrated into decision-making processes and information dissemination, the biases it carries can exacerbate existing societal inequalities, erode trust, and hinder progress toward a more equitable world.

THE PATH FORWARD: TOWARDS RESPONSIBLE AI

Jane Warren’s work at Fordham highlights the critical importance of fostering AI responsibility. Ensuring that AI models are safe, equitable, and contribute positively to the common good requires a multi-faceted approach involving developers, policymakers, and end-users alike.

Key strategies for addressing AI bias include:

- Diverse Training Data: Actively seeking out and incorporating diverse and representative datasets that challenge existing biases, rather than merely reflecting them.

- Algorithmic Auditing: Implementing rigorous testing and auditing processes to identify and rectify biases within AI models before deployment.

- Transparency and Explainability: Developing AI systems that are more transparent in how they arrive at their outputs, allowing for better understanding and correction of biases.

- Ethical AI Development: Prioritizing ethical considerations throughout the entire AI development lifecycle, from design to deployment and maintenance. This includes engaging interdisciplinary teams with expertise in ethics, sociology, and human rights.

- User Education and Critical Engagement: Empowering users to approach AI-generated content with a critical eye, understanding that these tools are not neutral and may reflect societal prejudices.
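As a rough illustration of what the auditing step in the list above might involve, the sketch below tallies body-size labels by prompt polarity and reports the gap between the two. It assumes each generated image has already been assigned a category by a reviewer; the function name `category_shares`, the label names, and the toy records are all invented for illustration and are not data or code from the study.

```python
from collections import Counter, defaultdict


def category_shares(records):
    """Map each prompt polarity to the share of images in each body-size category."""
    counts = defaultdict(Counter)
    for polarity, category in records:
        counts[polarity][category] += 1
    return {
        polarity: {cat: n / sum(tally.values()) for cat, n in tally.items()}
        for polarity, tally in counts.items()
    }


# Toy records, made up for this example only (NOT results from the study).
toy_records = [
    ("positive", "thin"), ("positive", "normal"),
    ("positive", "normal"), ("positive", "thin"),
    ("negative", "normal"), ("negative", "overweight"),
    ("negative", "normal"), ("negative", "thin"),
]

shares = category_shares(toy_records)

# Difference in the share of "overweight" images between negative and positive prompts.
gap = shares["negative"].get("overweight", 0.0) - shares["positive"].get("overweight", 0.0)
print(gap)  # 0.25 for the toy records above
```

A gap near zero would suggest that prompt polarity has little effect on the body sizes depicted; a large positive gap is the kind of pattern an audit would flag for review before deployment.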

Warren herself intends to pursue graduate studies in computer science with a career dedicated to AI responsibility. Her ambition is to not only improve AI models but also to study the broader societal consequences of the AI revolution, including its effects on human cognition, health, and social well-being. Her perspective emphasizes that “it’s very important to keep the human user at the center when we’re talking about anything technology related.”

CONCLUSION: SHAPING AN EQUITABLE DIGITAL FUTURE

The Fordham study on fatphobia in AI-generated images serves as a powerful reminder that artificial intelligence is a reflection of its creators and the data it consumes. While AI holds immense promise for positive transformation, the biases it learns from that data, if left unaddressed, risk perpetuating and even amplifying existing societal prejudices. The research by Jane Warren is a call to action for the AI community, urging a conscious and concerted effort to build systems that are not only intelligent but also fair, inclusive, and genuinely beneficial for all of humanity. As AI continues to evolve, our collective commitment to ethical development will be paramount in shaping a digital future that is truly equitable.